SIID Technologies

An AI-powered evidence tool that introduced me to machine learning on AWS and to setting realistic expectations with founders.

Architecture:

Launch date: July 2023

Live at: siid.ai

Role: Lead developer, UX designer, systems architect

CONTEXT

SIID uses AWS to transcribe audio and video files and surface insights that aid the evidence discovery process. This was one of the most complex and challenging projects of my career, not only because of the tool's complexity but because testing meant working with graphic police body cam footage. It was my first project as Development Lead at Platform, so the product timeline, feature prioritization and build were all my responsibility. The level of fidelity we achieved in an 8-week development window was impressive, but it was not without its shortcomings.
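The case study doesn't say which AWS service handled transcription. As a minimal sketch, assuming Amazon Transcribe's standard JSON result document (a `results.items` list where spoken words carry `start_time`/`end_time` and punctuation items do not), the step that turns raw output into timestamped words a UI can link to might look like this. The `Word` type and `parse_transcribe_output` function are hypothetical names for illustration.

```python
import json
from dataclasses import dataclass


@dataclass
class Word:
    """One recognized word with its timing in seconds (hypothetical type)."""
    text: str
    start: float
    end: float


def parse_transcribe_output(raw_json: str) -> list[Word]:
    """Turn an Amazon Transcribe result document into timed words.

    Assumes the standard output layout: results.items, where
    "pronunciation" items carry start/end times and "punctuation"
    items do not, so punctuation is appended to the preceding word.
    """
    items = json.loads(raw_json)["results"]["items"]
    words: list[Word] = []
    for item in items:
        content = item["alternatives"][0]["content"]  # top-ranked alternative
        if item["type"] == "pronunciation":
            words.append(Word(content, float(item["start_time"]), float(item["end_time"])))
        elif words:  # punctuation: attach to the previous word
            words[-1].text += content
    return words


sample = json.dumps({"results": {"items": [
    {"type": "pronunciation", "start_time": "0.00", "end_time": "0.42",
     "alternatives": [{"confidence": "0.98", "content": "Stop"}]},
    {"type": "punctuation",
     "alternatives": [{"confidence": "0.0", "content": "!"}]},
]}})
print(parse_transcribe_output(sample))  # [Word(text='Stop!', start=0.0, end=0.42)]
```

Keeping each word's start time is what lets a transcript view seek the media player to the moment a line was spoken.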

My original mockups were very high fidelity. I wanted law offices to be able to collaborate and have all of their information on one page, with everything visible at once. Because case files are often quite large, I quickly realized that displaying everything at once was not in users' best interests. We instead opted for a tabbed right-hand column and put more emphasis on descriptive iconography.

Another large change was removing sentiment analysis, which used an AI model to evaluate the overall tone of a video or audio file. The founder felt strongly that it was essential for launch, but I worried it would add little value to the tool at this stage. With our simple ML model, we could only label each part of the transcript as positive, negative or neutral. As you might expect for police body cam footage, 99% of the sentiment came back negative.
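To illustrate why a three-way label added little here, the sketch below tallies per-segment sentiment labels into a distribution. It assumes labels like those returned by Amazon Comprehend's `DetectSentiment` (`POSITIVE`, `NEGATIVE`, `NEUTRAL`, `MIXED`); the case study doesn't name the service, and `sentiment_distribution` is a hypothetical helper.

```python
from collections import Counter

# Label set assumed from Amazon Comprehend's DetectSentiment; the text
# only mentions positive, negative and neutral.
LABELS = ("POSITIVE", "NEGATIVE", "NEUTRAL", "MIXED")


def sentiment_distribution(segment_labels: list[str]) -> dict[str, float]:
    """Return the fraction of transcript segments carrying each label."""
    total = len(segment_labels)
    if total == 0:
        return {label: 0.0 for label in LABELS}
    counts = Counter(segment_labels)
    return {label: counts[label] / total for label in LABELS}


# A body cam transcript where almost every segment reads as negative.
labels = ["NEGATIVE"] * 99 + ["NEUTRAL"]
print(sentiment_distribution(labels))
# {'POSITIVE': 0.0, 'NEGATIVE': 0.99, 'NEUTRAL': 0.01, 'MIXED': 0.0}
```

When one label dominates like this, the summary mostly restates what the reviewer already knows about the footage.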

CHALLENGES

The biggest mistake I made during this build was weak expectation-setting. We created a beautiful wireframe prototype in Figma and did not set clear expectations with the founder about feasibility. Because Platform built on experimental development frameworks such as Bubble.io, and this was my first time working with AWS, we couldn't fully validate every design up front. In the end, the product matched the prototype pixel for pixel; the problem was that we couldn't build as sophisticated a machine-learning pipeline as the founder had expected at launch.

Knowing that our smart analysis would be simplified for launch, I focused on organizing data and improving the speed and collaborative nature of the tool. We wanted this to be the best budget evidence discovery option on the market. Even so, there are many ways to skin a cat, and I frequently found myself saying, "just because we can do this doesn't mean we should." One of our experiments was generating a word cloud from commonly repeated words or phrases.
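A word cloud is mostly a frequency count with rendering on top. Here is a minimal sketch of the counting step, assuming simple lowercase tokenization and a small hand-written stopword list (both hypothetical choices; the case study doesn't describe how SIID built its cloud). Counting phrases would work the same way over n-grams instead of single words.

```python
import re
from collections import Counter

# A deliberately tiny stopword list for illustration; real lists are longer.
STOPWORDS = {"the", "a", "an", "and", "or", "to", "of", "in", "on", "is",
             "it", "i", "you", "he", "she", "we", "they", "that", "this"}


def word_frequencies(transcript: str, top_n: int = 20) -> list[tuple[str, int]]:
    """Return the top_n most common non-stopword words in a transcript."""
    words = re.findall(r"[a-z']+", transcript.lower())
    counts = Counter(w for w in words if w not in STOPWORDS)
    return counts.most_common(top_n)


print(word_frequencies("Get on the ground! Get down. Hands on the ground.", top_n=2))
# [('get', 2), ('ground', 2)]
```

The resulting `(word, count)` pairs can be handed to any word-cloud renderer, which scales each word by its count.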

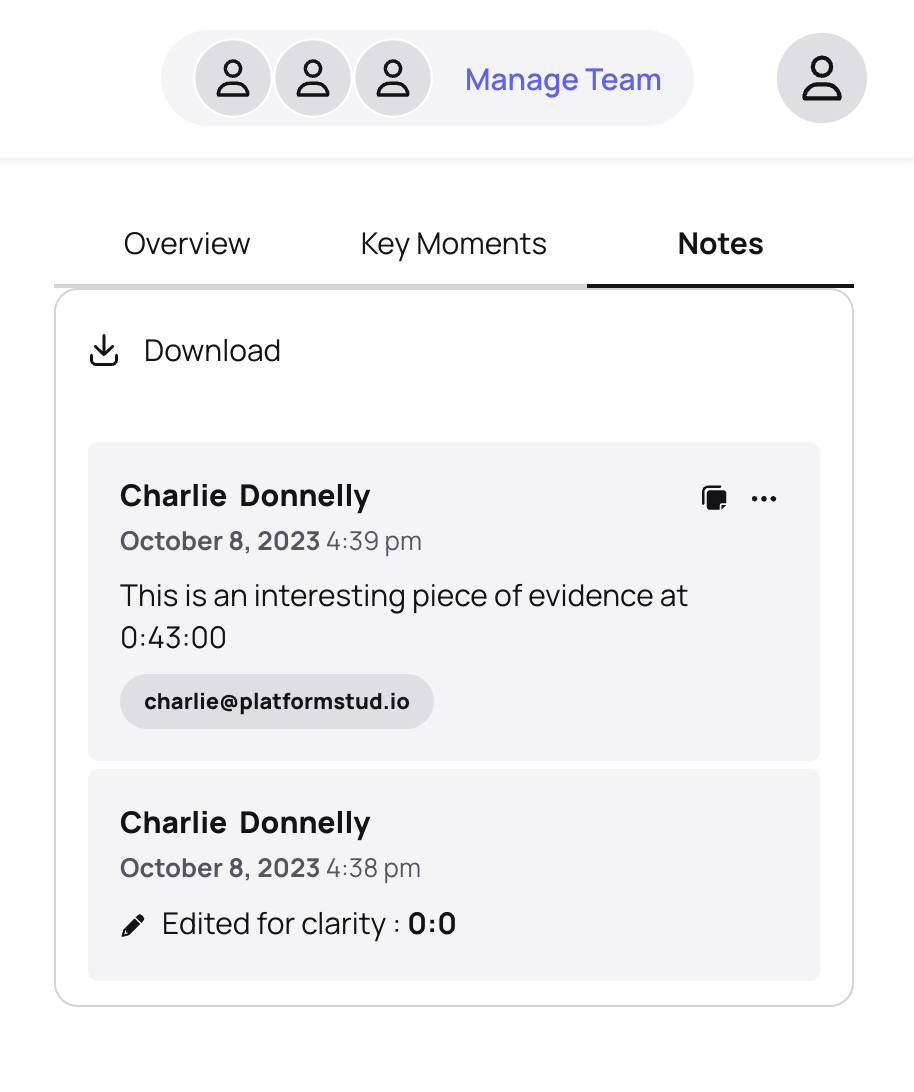

Collaboration was another challenging part of SIID. Since this is a legal tool, security is one of its most critical components (as much as I'd like to be subpoenaed). We modeled collaboration on Google Drive: file sharing, comments, edit history and tagging.
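Drive-style sharing usually comes down to ordered roles plus an access check. The sketch below assumes viewer/commenter/editor/owner roles and a hypothetical mapping of SIID actions to the minimum role each needs; the case study doesn't describe SIID's actual permission model.

```python
from enum import IntEnum


class Role(IntEnum):
    """Drive-style sharing roles, ordered from least to most privileged."""
    VIEWER = 1
    COMMENTER = 2
    EDITOR = 3
    OWNER = 4


# Minimum role each action requires (hypothetical action names).
REQUIRED_ROLE = {
    "view": Role.VIEWER,
    "comment": Role.COMMENTER,
    "tag": Role.COMMENTER,
    "edit_transcript": Role.EDITOR,
    "share": Role.OWNER,
}


def can(shares: dict[str, Role], user: str, action: str) -> bool:
    """Return True if `user` holds a role at least as high as `action` needs.

    Users absent from the share list get no access (deny by default).
    """
    role = shares.get(user)
    return role is not None and role >= REQUIRED_ROLE[action]


shares = {"alice@firm.example": Role.OWNER, "bob@firm.example": Role.COMMENTER}
print(can(shares, "bob@firm.example", "comment"))          # True
print(can(shares, "bob@firm.example", "edit_transcript"))  # False
```

Ordering the roles with `IntEnum` means each higher role inherits every lower role's permissions, and anyone not explicitly shared on a case falls through to no access, the safer default for case files.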

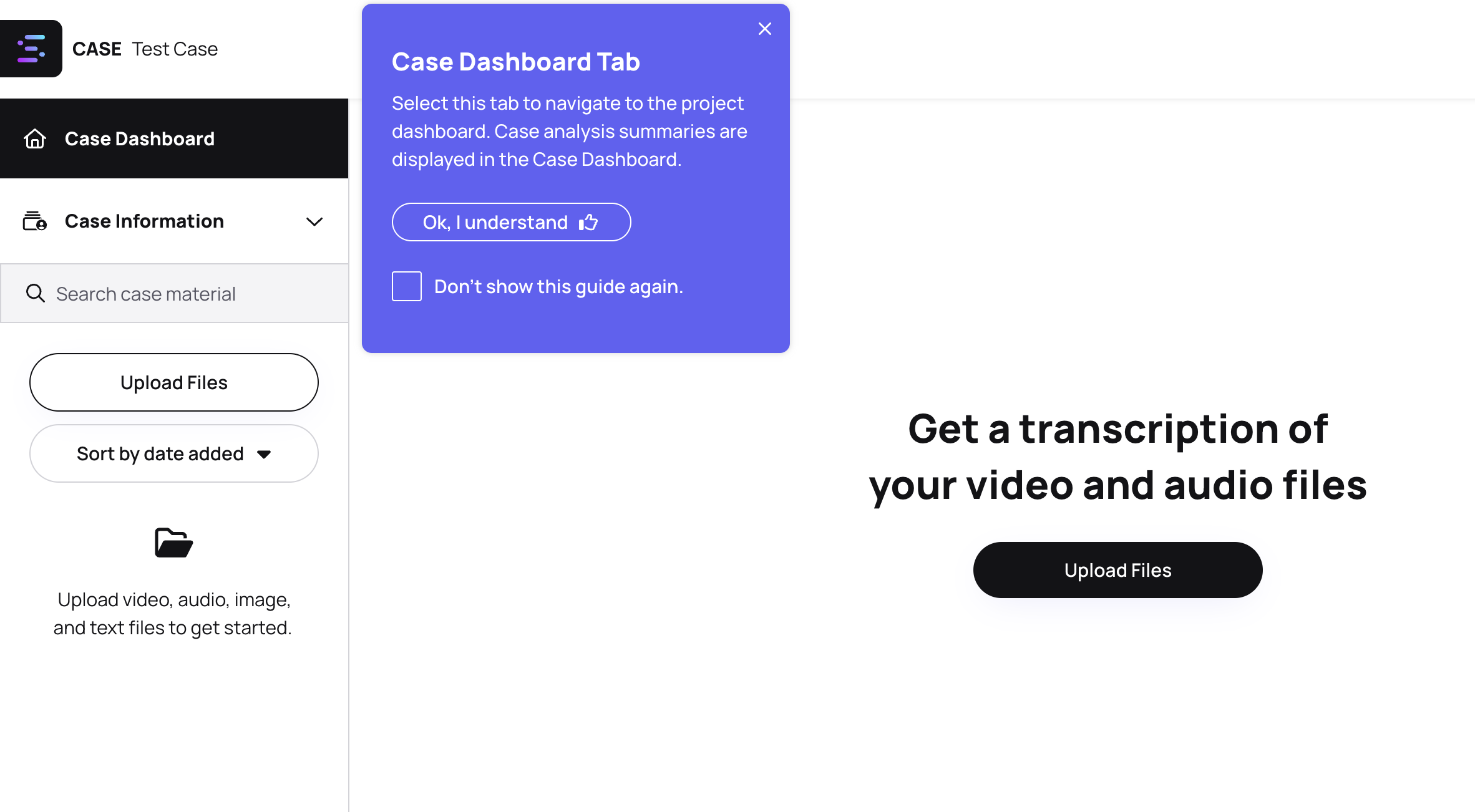

SIID was an excellent lesson in UX writing and clarity. We were building a dense, complex tool for an analog industry that is hesitant to adopt new technology, so our language needed to be clear, familiar and simple. Self-guided tutorials, tooltips and iconography were our biggest assets when designing the UX.

The SIID pricing structure also went through numerous iterations, driven by both user feedback and technical limitations. One challenge was explaining the different tiers to users who didn't yet know exactly what their workflow would look like. By offering trials to all of our beta firms, we were able to test each billing structure and make tweaks as needed.

We wanted users to be able to see the original transcript, any edits made to it, and a history of those edits along with who made them. Because we were time-constrained, my solution was to save an instance of each edit and mark it in the project notes so users could navigate to that instance and see the change.

Post-launch, I was given the opportunity to spend more time on this feature. Simply stating that a transcript had been edited wasn't enough; the founder needed to see a history of the changes. Rather than reinventing the wheel, I looked for an existing product with this capability, and Google spellcheck/search history happened to offer an open-source API for it.

As you can see, our final edit history feature lets users engage with edits in multiple ways. A team member can view a log of each edit, jump straight to that section of the transcript, view a visual history of the edits, and even view a transcript block's engagement history from the block itself.
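These views can all be served from one list of edit records. A minimal sketch, assuming a hypothetical `Edit` schema keyed by transcript block ID; in this sketch the block ID doubles as the anchor a UI would scroll to when a user jumps from the log to the transcript.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass(frozen=True)
class Edit:
    """One saved change to a transcript block (hypothetical schema)."""
    block_id: str      # also the anchor used to jump to the block
    author: str
    timestamp: datetime
    before: str
    after: str


@dataclass
class EditLog:
    edits: list[Edit] = field(default_factory=list)

    def record(self, edit: Edit) -> None:
        self.edits.append(edit)

    def timeline(self) -> list[Edit]:
        """All edits, newest first, for the project-wide log view."""
        return sorted(self.edits, key=lambda e: e.timestamp, reverse=True)

    def for_block(self, block_id: str) -> list[Edit]:
        """Edits to one block, oldest first, for its in-place history."""
        return sorted((e for e in self.edits if e.block_id == block_id),
                      key=lambda e: e.timestamp)


log = EditLog()
log.record(Edit("b1", "alice", datetime(2023, 7, 1, 9, 0), "ran", "walked"))
log.record(Edit("b2", "bob", datetime(2023, 7, 1, 10, 0), "north", "south"))
log.record(Edit("b1", "bob", datetime(2023, 7, 2, 9, 0), "walked", "jogged"))
print([e.author for e in log.timeline()])       # ['bob', 'bob', 'alice']
print([e.after for e in log.for_block("b1")])   # ['walked', 'jogged']
```

Storing the before and after text on every record means any view can re-render the change later without replaying the whole history.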

The Google algorithm compares two versions of a text and highlights the differences between them. We stored these results as edit histories and marked the differences with color (red for removed, purple for added).
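The case study credits a Google diffing API without naming it; Google's open-source diff-match-patch library is a common choice for this kind of feature, but that is an assumption. To keep the sketch dependency-free, it swaps in Python's standard-library `difflib`, diffing word lists and wrapping removals and additions in colored `<del>`/`<ins>` tags. `render_diff` is a hypothetical helper.

```python
import difflib
import html

REMOVED_COLOR = "red"     # colors from the text: red for removed,
ADDED_COLOR = "purple"    # purple for added


def render_diff(old: str, new: str) -> str:
    """Return HTML that marks removed words in red and added words in purple.

    Diffs whole words rather than characters so highlights line up with
    transcript words.
    """
    old_words, new_words = old.split(), new.split()
    matcher = difflib.SequenceMatcher(a=old_words, b=new_words, autojunk=False)
    parts: list[str] = []
    for op, i1, i2, j1, j2 in matcher.get_opcodes():
        if op == "equal":
            parts.append(html.escape(" ".join(old_words[i1:i2])))
            continue
        if op in ("delete", "replace"):
            removed = html.escape(" ".join(old_words[i1:i2]))
            parts.append(f'<del style="color:{REMOVED_COLOR}">{removed}</del>')
        if op in ("insert", "replace"):
            added = html.escape(" ".join(new_words[j1:j2]))
            parts.append(f'<ins style="color:{ADDED_COLOR}">{added}</ins>')
    return " ".join(parts)


print(render_diff("the suspect ran north", "the suspect walked north"))
# the suspect <del style="color:red">ran</del> <ins style="color:purple">walked</ins> north
```

Word-level diffing is a design choice here: character-level diffs catch smaller corrections but produce noisier highlights in a transcript.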

LEARNINGS

To this day, this is the most advanced MVP I have crafted in my career. Since launch we have poured so much time and energy into the product that it is now exceptionally stable. The mistakes I made during development were critical to my growth and to the success of our subsequent builds. I have learned to prioritize my relationship with the founder and to set expectations properly. As a young developer, I tried to bite off more than I could chew, but I am a better leader as a result.